Anatoly Reshetnikov is Assistant Professor of International Relations at Webster University in Vienna, Austria.

Xymena Kurowska is Associate Professor in the Department of International Relations at Central European University.

On August 10, 2021, Facebook published its latest report on what it calls, somewhat euphemistically, “coordinated inauthentic behavior.” The company revealed that, in July 2021, it had removed 144 Facebook accounts, 262 Instagram accounts, 13 Pages, and 8 Groups for involvement in coordinated campaigns aimed at manipulating public debate across Facebook-affiliated platforms. A large share of those (mostly fake) accounts were associated with the UK-registered marketing firm Fazze, whose operations were primarily conducted from Russia. The two campaigns Fazze orchestrated in November-December 2020 and May 2021 targeted India and Latin American countries. Their main aim was to undermine public trust in the newly developed Pfizer and AstraZeneca coronavirus vaccines.

Most notably, Facebook noted that the campaigns’ timing coincided “with periods when a number of governments, including in Latin America, India and the United States, were reportedly discussing the emergency authorizations for these respective vaccines.” What Facebook did not mention, however, is that Russia had its eye on India and Latin America (specifically the latter’s largest countries, Argentina and Brazil) as desired markets for Sputnik V. Nor did the report emphasize that December 2020 and May 2021 were crucial stages in the promotion of Sputnik V in those countries.

As reported by the head of the Russian Direct Investment Fund, Kirill Dmitriev, Russia expected the registration of Sputnik V in Brazil in December 2020 and production in January. In Argentina, Sputnik V was the first vaccine that arrived — this happened in December 2020. Yet obstacles to delivering the second dose of the vaccine, which, by design, is different from the first one, as well as many more broken promises to other Latin American countries, were expected to (and did) create a backlash against the Russian vaccine. In India, the production of Sputnik V was first suggested by Putin, then approved by Russia’s Sovereign Wealth Fund in November 2020. At the beginning of May 2021, Sputnik shots were finally expected to hit the Indian market by the end of the month. What is more, India was supposed to become the largest production site for Sputnik V outside Russia, supplying 60% of all Russian-designed vaccines produced abroad. By mid-May 2021, India, Brazil and Argentina did, in fact, join the list of the largest producers of Sputnik V.

To us, this incident provides a good opportunity to recall and discuss the two coordinated political practices we conceptualized in recent articles: neutrollization and trickstery. Neutrollization is a defensive mechanism used occasionally by the Russian government, as well as various actors presumably affiliated with it, to shield the regime from criticism and exposure. It involves proliferating often bizarre alternative explanations for a given rule-breaking event of which Russia is being accused, such as the assassination of Boris Nemtsov in 2015 or the poisoning of Alexey Navalny in 2020. Together, these alternative versions crowd out genuine voices within civil society, undermining the possibility of meaningful debate. Rather than convincing the audience of one Kremlin-friendly version, neutrollization produces confusion and subsequent disengagement, which helps preserve plausible deniability.

Trickstery comes in many shapes and forms. It is both a shared cultural archetype and a situational script that political actors may utilize while interacting with their social environment. One contextual version of trickstery is overidentification. This tactic involves an overt endorsement of norms combined with their implicit subversion and defiance. An uninformed observer would normally not be able to tell if a trickster genuinely believes in the values to which it rhetorically subscribes, or is simply mocking them for its own benefit and/or pleasure — all of which undermines the system’s normative core. A thorough contextual interpretation usually reveals that the trickster is pursuing mockery for personal benefit. As we showed previously, Russia has used trickstery on many occasions, including in its advocacy for a new UN resolution on responsible state behavior in cyberspace in November 2018.

The design of the two Russia-linked disinformation campaigns exposed by Facebook reveals a peculiar mix of neutrollization and trickstery in action. On the one hand, the people who organized those campaigns attempted to breed confusion by proliferating both deceptively credible and frankly outlandish messages related to the alleged shortcomings of both vaccines. They also built comment threads populated mostly by members of their own troll networks — a practice used widely in the neutrollization campaign that followed Boris Nemtsov’s assassination.

On the other hand, in their coordinated behavior, campaign agents overidentified with (1) progressive liberal values, steering clear of anti-vax rhetoric, and (2) Western cultural heritage, which they used as a reference point for the cruder part of the first campaign. Both overidentifications make perfect sense given Russia’s target audience and the country's implicit attempt to promote its own vaccine. Yet it is still difficult to shake off the sarcasm peeking through the deceptively ardent adherence to international procedures and analytical transparency at the core of Russian allegations against AstraZeneca and Pfizer. The dissonance is especially pronounced because it was precisely the lack of those two qualities, observance of international procedure and analytical transparency, that hampered broader global adoption of Sputnik V.

Neutrollization was more prominent during the first campaign, against AstraZeneca. Fazze created a multitude of misleading articles, posts, and petitions on platforms such as Change.org, Reddit, and Medium, and later used a network of fake Instagram and Facebook accounts to spread that content among authentic users. As the authors of the Facebook report note, the “campaign functioned as a disinformation laundromat.” The (brain)washing involved, however, did not rely on one coherent message. Instead, Fazze spread different messages: from deceptively plausible accusations of data manipulation during clinical trials (far left), to outlandish memes claiming that AstraZeneca could turn people into chimpanzees because chimpanzee genes were used to design the vaccine (second from left), to odd combinations of both (second from right). The posts were often followed by conversations in comment sections whose participants were connected to the campaign network (far right).


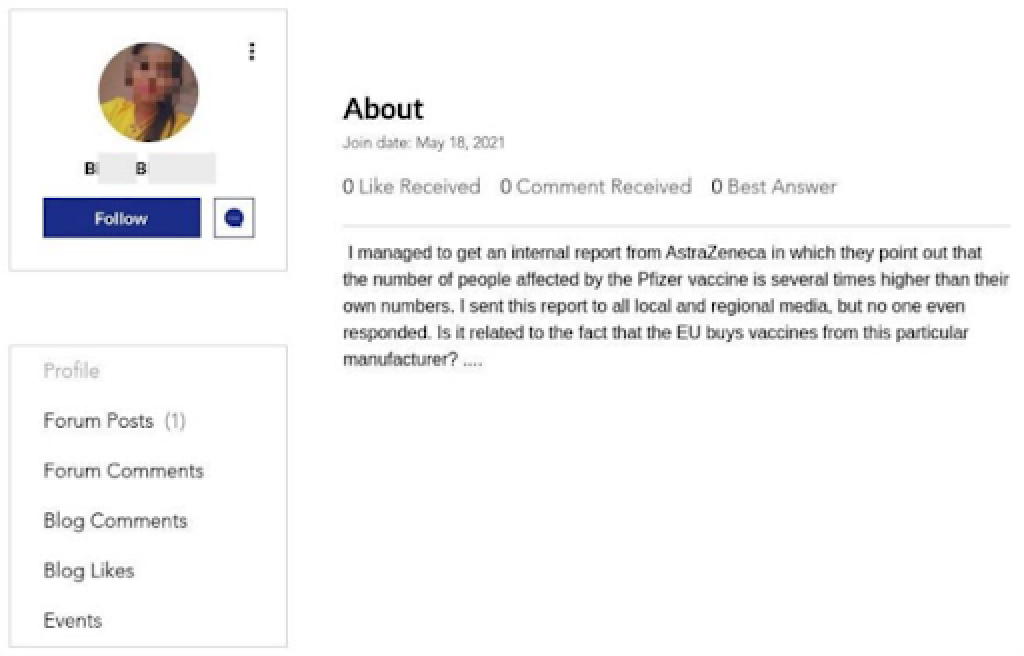

In May 2021, when it was Pfizer’s turn to withstand an attack, the strategy shifted slightly, as Fazze tried to recruit authentic influencers to promote its message. While the message itself was much more coherent (a leaked AstraZeneca document supposedly showed that Pfizer caused three times more deaths than AZ), the instructions Fazze sent to its influencers included a visible neutrollization component. Namely, those delivering the message were supposed to appeal to citizens' critical faculties, their intellectual capacity to question. The guidelines required influencers to conclude their videos or posts by asking: “Why some goverments actively purchasing Pfizer vaccine, which is dangerous to health of the people?” [original syntax and spelling preserved]. Thus, unlike more conventional propaganda that often tries to create false consciousness or “manufacture consent” (Herman and Chomsky 2002), neutrollization manufactures cynicism.

Trickstery, and specifically overidentification, was also manifest in both campaigns. As a Change.org petition created as part of the first campaign (against AZ) makes clear, the plotters tried to come off as arrant liberals. They appealed to a sense of global community, defended evidence-based medicine, and demanded transparency from both scholars and governments, while accusing AstraZeneca of data manipulation during clinical trials.

The Pfizer campaign had a very similar vibe, based on an alleged commitment to scientific transparency and to the supreme value of human life, both of which had apparently been betrayed by those who were supposed to guard them, i.e., the EU (below). Although in reality Fazze offered compensation to influencers who agreed to post, onlookers could easily have read the motivation behind publicizing and sharing the leak as stemming from individual responsibility and constant civic alertness, key qualities of the good citizen of a liberal democracy. Ironically, it was precisely these two qualities that eventually compromised the campaign and exposed the whole network, when several influencers in Germany and France blew the whistle.

The cruder part of the first campaign, which promoted the message that AstraZeneca could turn people into chimpanzees, also looked like the doings of a trickster. Shapeshifting undertones, slapstick humor, preposterous claims: all of these elements borrow liberally from the trickster’s playbook. The choice of discursive material is especially symptomatic. The iconic 1968 movie Planet of the Apes, which supplied the material for the smear campaign’s memes, leads directly to the core of Western (and global) popular culture. To remain intelligible in its cunning wrongdoing, the trickster must select cultural tropes with care, always ensuring that audiences relate to them immediately from within their own cultural contexts. With this principle in mind, choosing a Western classic to crack a broad joke seems natural. How the joke lands, however, is never predetermined. The Cold War-era and antimilitarist themes central to the original movie also play a part in its reception today.

Disinformation and trolling on digital platforms have been central to the conversation about undermining contemporary democracies. The technical means to trace “inauthentic behavior” have grown in sophistication, while the political urgency to expose and sanction has led to regulatory initiatives at domestic and global levels. Neutrollization and trickstery identify micro-mechanisms, social repertoires, and socio-political tactics that reflect broader patterns of interaction and stratification in contemporary (digital) international society. Dense networks of state and non-state actors become sites of struggle over symbolic and material capital globally. To trick and to neutrollize in such a context is therefore hardly just a crude propagandistic move, and countering it exclusively as such may not be effective. Transnational efforts to regulate “inauthentic behavior” need to consider the complex origins and mechanisms of such practices. Assured denunciation is as likely to “feed the troll,” that is, to become fodder for trolling, as it is to raise awareness about trolling.